For military forces, AI is a case of ‘coming, ready or not’. Developed to meet commercial imperatives, the technology is rapidly maturing. It is now up to militaries to gain the best advantage from it.

AI technology is suddenly important to military forces. Not yet an arms race, today’s competition is more an experimentation race, with a plethora of AI systems being tested and research centres established. The country that first understands AI well enough to change its existing human-centred force structures and embrace AI warfighting may gain a considerable advantage.

In a new paper for the Australian government, I explore sea, land and air operational concepts appropriate to fighting near-to-medium term future AI-enabled wars. This temporal proximity makes the exercise less speculative than might be assumed. In addition, the nature of contemporary ‘narrow’ AI means its initial employment will be within existing operational-level constructs, not wholly new ones.

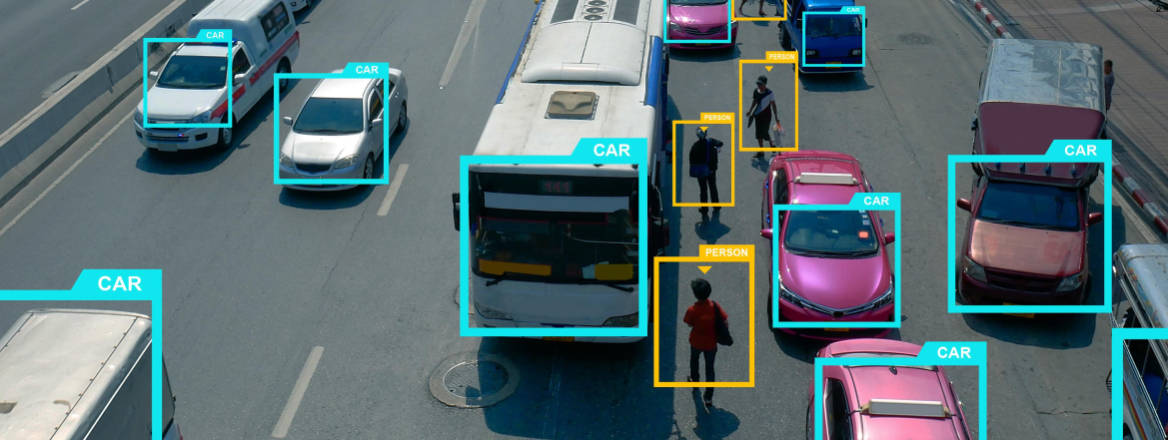

AI allows machines to accomplish their tasks through reasoning, not set mechanical responses. In the nearer term, AI’s principal attraction will be its ability to quickly identify patterns and detect items hidden within very large data troves. While this characteristic also gives mobile systems a new autonomy, its principal significance is that AI will make it much easier to sense, localise and identify objects across the battlespace. Hiding will become increasingly difficult. However, AI is not perfect. It has well-known problems: it is brittle, can be fooled, cannot transfer knowledge gained in one task to another, and is dependent on data.

AI’s main warfighting utility consequently appears to be ‘find and fool’. AI is excellent at finding items concealed within a high clutter background. In this role, AI is better than humans and much faster. On the other hand, AI can be comprehensively fooled through various means. AI’s great ‘find’ capabilities lack robustness. The future AI-enabled battlespace might be more evolutionary than revolutionary.

A future battlespace might feature hundreds, possibly thousands, of small-to-medium stationary and mobile AI-enabled surveillance and reconnaissance systems operating across all domains. Simultaneously, there may be an equivalent number of jamming and deception systems acting in concert to create a false and deliberately misleading impression of the battlefield. Both sides might find it difficult to accurately attack when there are thousands of seemingly valid targets, only a small number of which are actually real.

On such a battlefield, the balance of power might be replaced by a balance of confusion. AI may offer the promise of high-speed hyperwars but that assumes a perfection in targeting that AI might also negate. It will take time to ascertain where the friendly and adversary forces intermingled across the battlespace really are. Engagements might be spasm-like, with nothing happening for an extended period as the tactical picture is developed, then a short sharp exchange of fire, with a new situation then created.

Accordingly, AI-enabled autonomous weapon systems (AWS) might be less important than some fear. Narrow AI technology already has technical shortcomings that constrain its utility. Saturating the battlespace with AI systems designed to fool AWS-like machines would significantly worsen these problems, meaning AWS would require continual human checking to ensure they are operating correctly. Thus, in terms of utility, more may be gained by using unarmed AI-enabled systems. These could operate autonomously, undertake a range of functions as they roam across the battlespace, and be unconstrained by law-of-armed-conflict concerns over lethal force or worries over connectivity with distant human operators.

These worries over AI’s inherent unreliability highlight that for practical purposes AI should be teamed with humans. Poorly performing AI-enabled systems will bring limited military usefulness irrespective of law of war and ethical issues. The upside to this is that the strengths of AI then counterbalance the weakness in human cognition and vice versa. World chess champion Garry Kasparov observed of chess playing human–machine teams: ‘Teams of human plus machine dominated even the strongest computers. Human strategic guidance combined with the tactical acuity of a computer was overwhelming. We could concentrate on strategic planning instead of spending so much time on calculations. Human creativity was even more paramount under these conditions’.

This observation suggests the Observe–Orient–Decide–Act model of decision-making may need changes. Under this model an observation cannot be made until after the event has occurred; the model inherently looks backwards in time. AI could bring a subtle shift. Given suitable digital models and adequate ‘find’ data, AI could predict the range of future actions an adversary could conceivably take and, from this, the actions the friendly force might take to counter these.

An AI-enabled decision-making model might be ‘sense–predict–agree–act’: AI senses the environment to find adversary and friendly forces; predicts what adversary forces might do and advises on the friendly force response; the human part of the human–machine team agrees; and AI acts by sending machine-to-machine instructions to the diverse array of AI-enabled systems deployed en masse across the battlefield.

AI appears likely to be the modern ‘ghost in the machine’ infusing many, perhaps most, military machines. Such diffusion means the impact of AI in warfighting cannot be judged by assessing individual machines, but rather by how large numbers of heterogeneous AI-enabled systems might simultaneously interact. This could make the broad shape and pace of future warfighting more like today’s than is presently anticipated, even if some unexpected wrinkles emerge as reasoning machines proliferate across the battlefield.

The views expressed in this Commentary are the author's, and do not represent those of RUSI or any other institution.

WRITTEN BY

Dr Peter Layton

RUSI Associate Fellow, Military Sciences